Endless Possibilities

The power of a Genetic Algorithm lies in its seemingly endless possibilities, which arise from two sources:

1) The depth of each program tree: more nodes → more functions → additional possibilities.

2) The number of generations it is allowed to evolve.
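To make the first source concrete, here is a minimal sketch of growing a random program tree under a maximum-depth limit. The function set (`add`, `sub`, `mul`, `max`), the `Node` class, the leaf types and `n_inputs=49` are all hypothetical choices for illustration, not the functions or board encoding used in this project. A single leaf counts as depth 1, so a full tree of depth `d` has `2**d - 1` nodes.

```python
import operator
import random
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence

# Hypothetical function set: binary operators only, so every internal node has two children.
FUNCTIONS: Dict[str, Callable[[float, float], float]] = {
    "add": operator.add,
    "sub": operator.sub,
    "mul": operator.mul,
    "max": max,
}


@dataclass
class Node:
    name: str                       # a key of FUNCTIONS, "const", or "input"
    value: float = 0.0              # the constant, or the input index for "input" leaves
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def evaluate(self, inputs: Sequence[float]) -> float:
        if self.name == "const":
            return self.value
        if self.name == "input":
            return inputs[int(self.value)]
        return FUNCTIONS[self.name](self.left.evaluate(inputs), self.right.evaluate(inputs))

    def size(self) -> int:
        # Leaves have no children; internal nodes always have both.
        if self.left is None:
            return 1
        return 1 + self.left.size() + self.right.size()

    def depth(self) -> int:
        if self.left is None:
            return 1
        return 1 + max(self.left.depth(), self.right.depth())


def random_tree(max_depth: int, n_inputs: int, rng: random.Random) -> Node:
    """Grow a random tree with at most max_depth levels."""
    # Always stop when the depth budget is used up; otherwise stop early 10% of the time.
    if max_depth <= 1 or rng.random() < 0.1:
        if rng.random() < 0.5:
            return Node("const", rng.uniform(-1.0, 1.0))
        return Node("input", float(rng.randrange(n_inputs)))
    name = rng.choice(list(FUNCTIONS))
    return Node(
        name,
        left=random_tree(max_depth - 1, n_inputs, rng),
        right=random_tree(max_depth - 1, n_inputs, rng),
    )


rng = random.Random(0)
tree = random_tree(max_depth=10, n_inputs=49, rng=rng)
print(tree.size(), tree.depth(), tree.evaluate([0.0] * 49))
```

Raising `max_depth` by one roughly doubles the number of nodes a tree can hold, which is where the "more nodes, more functions" growth in possibilities comes from.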

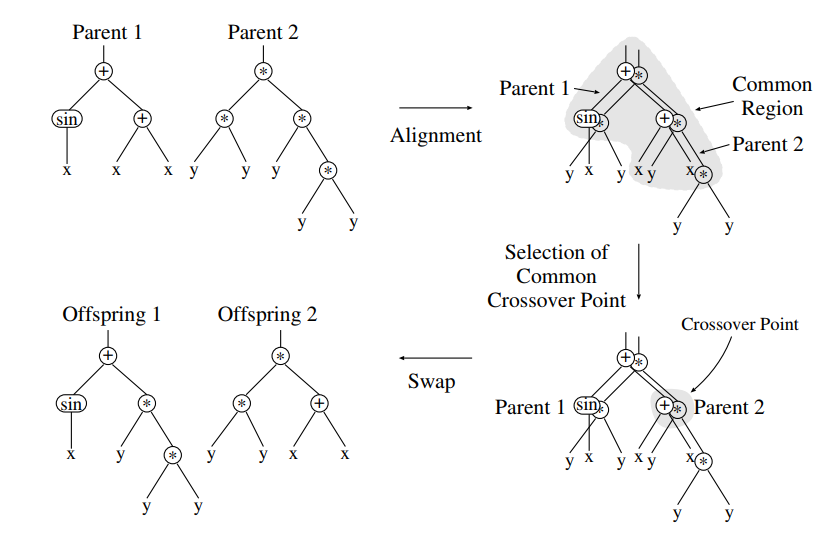

I experimented with maximum tree depths from 10 to 15 levels (about 1,024 to 32,768 nodes). Surprisingly, changing the maximum depth did not affect the final score. I now understand that beyond a certain depth, the randomness of the algorithm makes it hard for a large program to outperform smaller ones. There is always a chance that a random function in some node destroys all the computation done below it. Unfortunately, the random switching done during mating just isn't a strong enough tool to remove all of these rogue nodes.
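A toy illustration of such a rogue node, assuming a hypothetical function set that includes multiplication and constant leaves (not necessarily this project's functions): a subtree that genuinely depends on the board loses all of its influence once it sits under a `mul` node whose other child is the constant 0. Trees are written as nested tuples here only to keep the example short.

```python
import operator

# Hypothetical function set; "mul" next to a constant 0 acts as the "rogue" node.
FUNCTIONS = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}


def evaluate(tree, inputs):
    """Evaluate a tree written as (op, left, right), ("in", index), or a plain number."""
    if isinstance(tree, (int, float)):
        return tree
    if tree[0] == "in":
        return inputs[tree[1]]
    op, left, right = tree
    return FUNCTIONS[op](evaluate(left, inputs), evaluate(right, inputs))


# A subtree whose output really depends on the inputs: in0 + in1 - in2.
useful = ("sub", ("add", ("in", 0), ("in", 1)), ("in", 2))

# The same subtree placed under a node that multiplies it by the constant 0.
rogue = ("mul", 0, useful)

boards = [[1, 2, 3], [5, 0, 1], [0, 0, 9]]
for board in boards:
    print(evaluate(useful, board), evaluate(rogue, board))
```

The second value is 0 for every board, so no amount of improvement inside `useful` can change the program's output until mating happens to replace that exact node or its constant sibling.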

My initial thought when starting this project was that building large programs and letting them evolve over thousands of generations would eventually produce the optimal program for this game.

Reality

However, because a program learns only by randomly picking functions from its parents, without any understanding of which choices were mistakes, no major breakthroughs were achieved.
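The mating step described above can be sketched as a node-wise "uniform crossover". This is an assumption based on the phrase "randomly picking functions between its parents": it treats both parents as full binary trees of the same shape stored in heap order, and it is not necessarily the exact operator used here (classic tree-based genetic programming more often swaps whole subtrees). The names `uniform_crossover`, `parent_a` and `parent_b` are hypothetical.

```python
import random
from typing import List


def uniform_crossover(parent_a: List[str], parent_b: List[str], rng: random.Random) -> List[str]:
    """Create a child by taking each node's function from one parent, chosen at random.

    Both parents are assumed to be full binary trees of the same depth stored in
    heap order (the children of node i sit at 2*i + 1 and 2*i + 2), so position i
    refers to the same place in both trees.
    """
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same shape")
    # One fair coin flip per node: nothing here knows which parent's node was a mistake,
    # so a harmful node is exactly as likely to be inherited as a helpful one.
    return [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]


depth = 3
size = 2 ** depth - 1  # a full binary tree with 3 levels has 7 nodes
parent_a = ["add"] * size
parent_b = ["mul"] * size
child = uniform_crossover(parent_a, parent_b, random.Random(1))
print(child)
```

Selection decides which parents get to mate, but within a mating the choice of each node is blind, which is the limitation described above.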

Results

My first attempt required the program to return the specific column in which to place the curr_disc (taken modulo 7, to ensure the disc is inserted into a legal column, 0-6). The algorithm quickly got stuck in a local optimum and never progressed. I believe the randomness of the functions made this kind of exact calculation extremely hard, considering how a simple +2 or -1 as the final operation of a program tree would change the answer completely.
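A minimal sketch of the modulo mapping, assuming the program's raw output is a number that is truncated to an integer before taking it modulo 7 (the truncation step and the name `column_from_output` are assumptions). It shows why a small change at the root is so destructive: the resulting column jumps to an unrelated position.

```python
NUM_COLUMNS = 7  # legal columns are 0-6


def column_from_output(program_output: float) -> int:
    """Map a raw program output to a legal column index using modulo 7."""
    # int() truncates toward zero; Python's % then returns a value in 0..6 even for negatives.
    return int(program_output) % NUM_COLUMNS


raw = 17.4
print(column_from_output(raw))      # column for the original output
print(column_from_output(raw + 2))  # same program with an extra +2 at the root
print(column_from_output(raw - 1))  # same program with an extra -1 at the root
```

With this mapping, 17.4 selects column 3, adding 2 selects column 5, and subtracting 1 selects column 2, so the "fitness landscape" around a decent program is full of sharp cliffs.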

"All problems in computer science can be solved by another level of indirection."

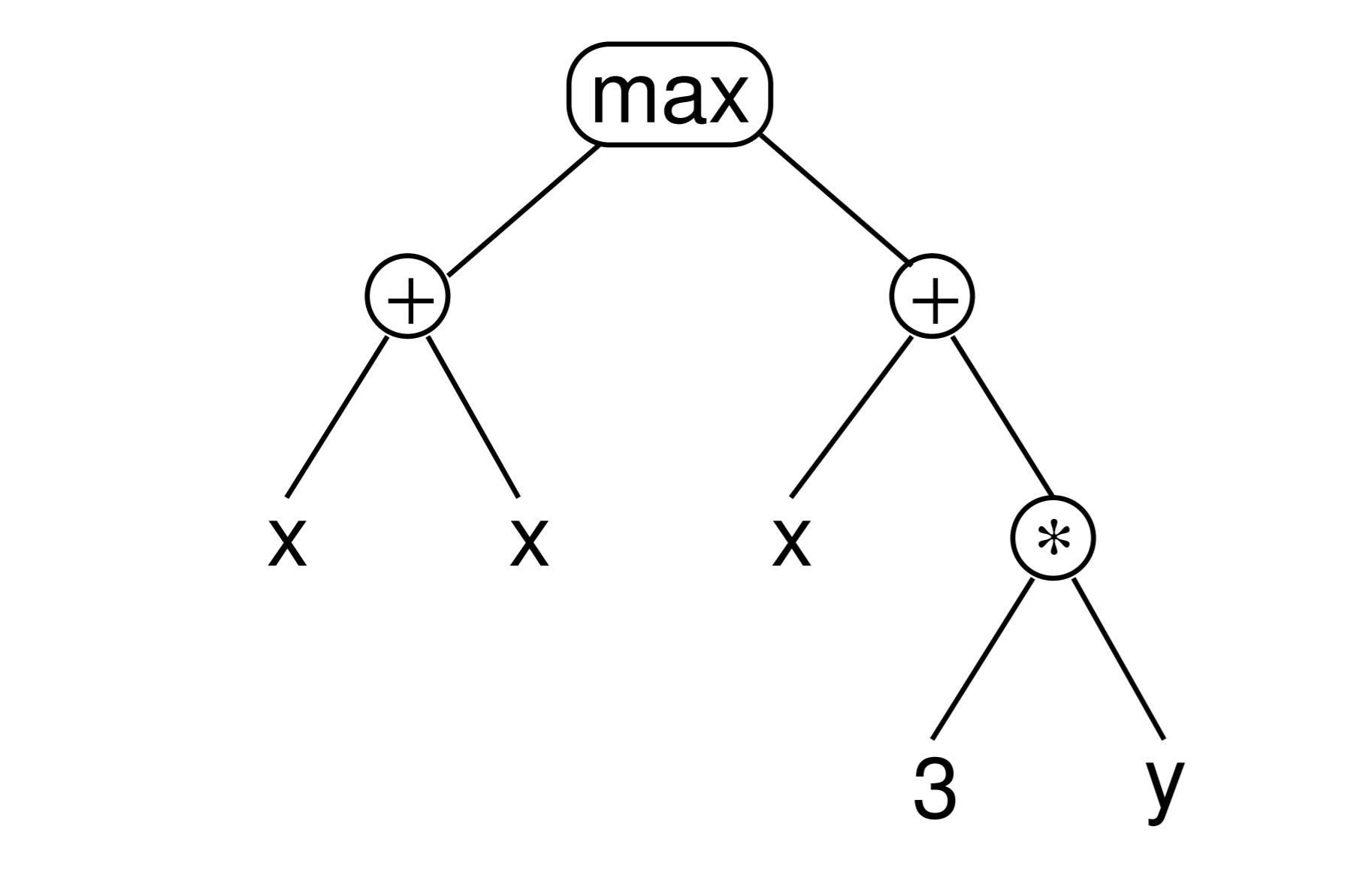

Instead, I required the program to score each action (there are only 7 available actions each turn), and the highest-scoring action was chosen. This change, from choosing the correct action to merely scoring each action, reduced the program's job to a simple rule: + is good, - is bad.
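The scoring approach amounts to an argmax over the seven columns. In this sketch, `score_action(board, column)` is a hypothetical signature for the evolved program (how the candidate action is actually fed to the program is not specified above), and `legal_columns` is an assumed hook for skipping columns that cannot accept a disc.

```python
from typing import Callable, Sequence

NUM_COLUMNS = 7


def choose_column(
    score_action: Callable[[Sequence[float], int], float],
    board: Sequence[float],
    legal_columns: Sequence[int] = range(NUM_COLUMNS),
) -> int:
    """Run the program once per candidate column and play the highest-scoring one."""
    # max() keeps the first maximum it sees, so ties go to the lowest column index.
    return max(legal_columns, key=lambda col: score_action(board, col))


board = [0.1, 0.5, 0.2, 0.9, 0.3, 0.0, 0.4]  # hypothetical per-column features

prefers_feature = lambda b, col: b[col]
prefers_center = lambda b, col: -abs(col - 3)
shifted = lambda b, col: -abs(col - 3) + 2  # same program with an extra +2 at the root

print(choose_column(prefers_feature, board))
print(choose_column(prefers_center, board))
print(choose_column(shifted, board))
```

Because only the ordering of the seven scores matters, a constant +2 at the root shifts every score equally and leaves the chosen column unchanged, which is exactly the kind of perturbation that broke the modulo approach.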

The results showed a significant increase in score, with an overall gain of 2.3 rounds. Studying the best program in the final generation suggests that its final score (~9) reflects a basic understanding of the rules of the game. However, no strategy or thinking ahead emerged; truly great performance seems to require a different approach.

Next Stage

The next stage will be to create a neural network: instead of relying on random improvements, I'll try gradient descent, with the idea that a more controlled learning process will produce better results. A benchmark for success will be whether the program can beat my personal average score of ~13.